Mission

Reconstructing an entire scene using only commonly used devices such as smartphones and digital cameras. No LiDAR allowed.

Answer

We tried many approaches, algorithms, and input sources to work toward a satisfying answer. The problem is not fully solved, but we expect major improvements in the coming years.

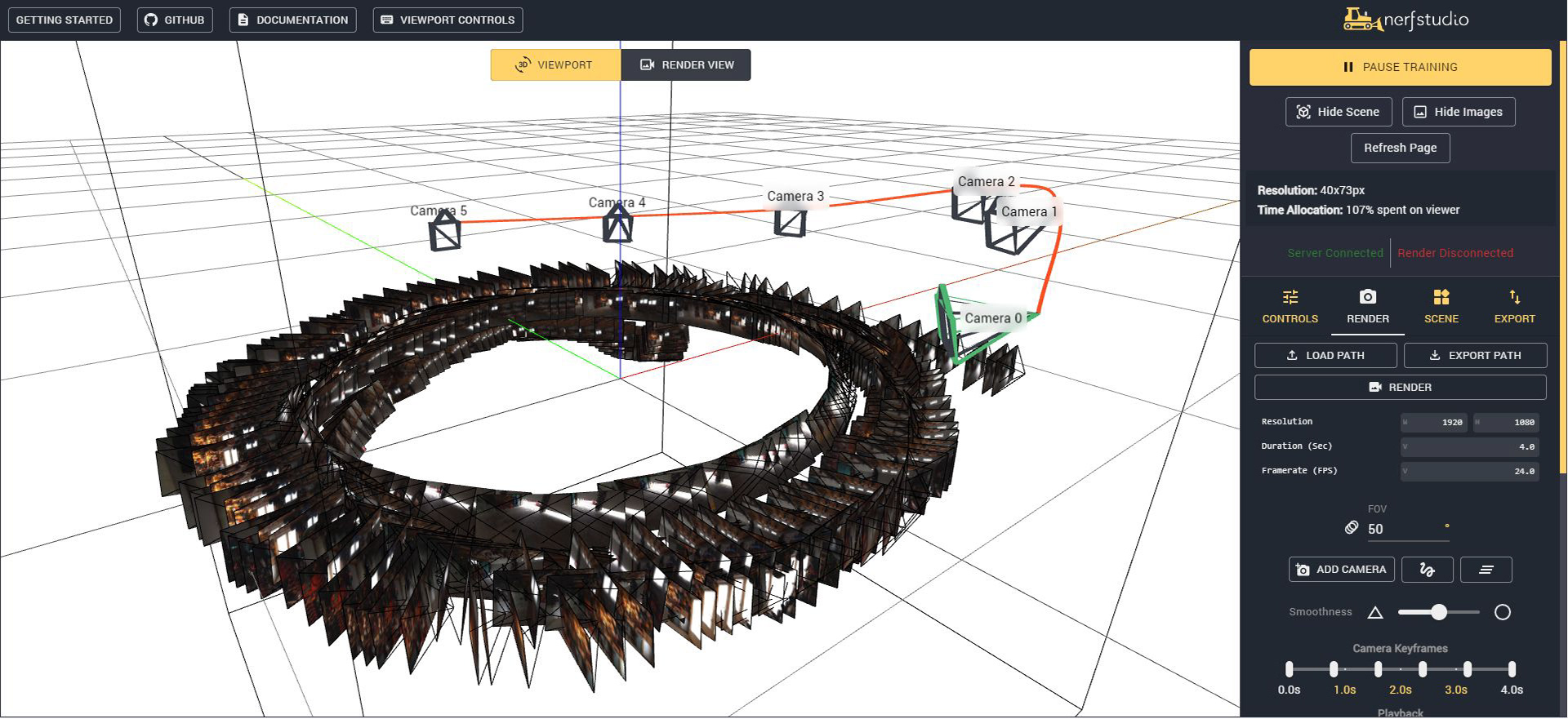

We achieved the best 3D reconstruction with NeRF (Neural Radiance Fields). There are many variants of NeRF, including NVIDIA's Instant NeRF, but they all share the same requirements:

- we need good-quality pictures: no motion blur, limited variation in brightness, limited noise

- we need decent GPU power
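
A quick way to screen the input photos before training is sketched below. It is a minimal illustration, not our pipeline: it assumes grayscale images already loaded as NumPy arrays, uses the variance of the Laplacian as a blur score, and compares mean brightness across the set. The function names and both thresholds (`blur_threshold`, `max_brightness_spread`) are hypothetical and would need tuning per camera; noise estimation is not covered.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of a 4-neighbour Laplacian response; low values suggest blur."""
    g = np.asarray(gray, dtype=np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def check_images(images, blur_threshold=100.0, max_brightness_spread=40.0):
    """Screen a set of grayscale images (2-D arrays, values 0-255).

    Returns the indices of likely blurry images and whether the spread of
    mean brightness across the set stays within max_brightness_spread.
    Both thresholds are illustrative assumptions, not tuned values.
    """
    if not images:
        raise ValueError("need at least one image")
    sharpness = [laplacian_variance(img) for img in images]
    brightness = [float(np.mean(img)) for img in images]
    spread = max(brightness) - min(brightness)
    return {
        "blurry_indices": [i for i, s in enumerate(sharpness) if s < blur_threshold],
        "brightness_spread": spread,
        "brightness_ok": spread <= max_brightness_spread,
    }


if __name__ == "__main__":
    detailed = (np.indices((64, 64)).sum(axis=0) % 2) * 255.0  # checkerboard: many edges
    smooth = np.full((64, 64), 128.0)                           # no detail, like a fully blurred frame
    print(check_images([detailed, smooth]))
```

With a checkerboard and a flat gray image, the flat one is flagged as blurry because it has no edges for the Laplacian to respond to, while the brightness check passes since both images average about 128.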

We tried every image-collection technique we could think of: standard photos, 360° captures, and video. The option that best balanced shooting time against processing time was recording a video with a fisheye lens. Shooting took only a few minutes, and our automated pipeline extracted good frames from the video in a few more minutes.
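
Frame extraction from a video can be sketched as picking the sharpest frame in each short window of consecutive frames, which discards motion-blurred frames while keeping coverage even. This is an assumed approach for illustration, not a description of our automated pipeline; `select_frames`, `sharpness`, and the default window size are hypothetical, and the frames are assumed to be already decoded to grayscale NumPy arrays.

```python
import numpy as np


def sharpness(gray: np.ndarray) -> float:
    """Variance of a 4-neighbour Laplacian; higher means more fine detail."""
    g = np.asarray(gray, dtype=np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def select_frames(frames, window=15):
    """Return the index of the sharpest frame in each run of `window` frames.

    At 30 fps, window=15 keeps about two frames per second (an assumed
    default). Ties go to the earliest frame in the window.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    selected = []
    for start in range(0, len(frames), window):
        candidates = range(start, min(start + window, len(frames)))
        selected.append(max(candidates, key=lambda i: sharpness(frames[i])))
    return selected
```

Decoding the video itself (for example with OpenCV's `cv2.VideoCapture`) and handling the fisheye distortion are left out of this sketch. The window size is the main trade-off: a larger window yields fewer, sharper frames and less processing, while a smaller one gives denser coverage of the scene.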

The missing step is converting a point cloud into a 3D mesh. Nearly everything in interactive 3D is built on meshes: we use them to walk on a floor, collide with a wall, or interact with a door handle.
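
Collision is one reason meshes matter: a point cloud has no surface to test against, whereas a triangle does. The sketch below uses the standard Möller-Trumbore ray-triangle intersection test to find where a downward ray from a hypothetical player hits a floor triangle. It is a generic textbook algorithm written in plain Python, not the collision code of any particular engine.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_hits_triangle(origin: Vec3, direction: Vec3,
                      v0: Vec3, v1: Vec3, v2: Vec3,
                      eps: float = 1e-9) -> Optional[float]:
    """Möller-Trumbore test: distance t along the ray to the hit, or None."""
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:                  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv_det            # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(s, e1)
    v = _dot(direction, q) * inv_det    # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(e2, q) * inv_det
    return t if t > eps else None       # ignore hits behind the ray origin


if __name__ == "__main__":
    # One triangle of a floor at z = 0; a "player" 1.7 units above it looks down.
    floor = ((0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0))
    print(ray_hits_triangle((0.5, 0.5, 1.7), (0.0, 0.0, -1.0), *floor))  # 1.7
    print(ray_hits_triangle((3.0, 3.0, 1.7), (0.0, 0.0, -1.0), *floor))  # None
```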

Unfortunately, converting a point cloud into a mesh is still imperfect.
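
To illustrate why the conversion is hard, the sketch below uses a deliberately crude method: it treats the point cloud as a height field z = f(x, y), bins points into a grid, and triangulates neighboring occupied cells. It is not the method we used; more robust options include Poisson surface reconstruction on the points or marching cubes on a NeRF's density field. `heightfield_mesh` and its `cell` size are hypothetical.

```python
import numpy as np


def heightfield_mesh(points, cell=0.1):
    """Naively mesh an (N, 3) point cloud as a height field z = f(x, y).

    Points are binned into square grid cells of size `cell` on the XY plane.
    Each occupied cell becomes one vertex (cell centre, mean z of its points),
    and every 2x2 block of occupied cells becomes two triangles.
    Returns (vertices: (V, 3) float array, faces: (F, 3) int array).
    """
    pts = np.asarray(points, dtype=np.float64)
    if pts.ndim != 2 or pts.shape[1] != 3 or len(pts) == 0:
        raise ValueError("expected a non-empty (N, 3) array of points")

    ij = np.floor(pts[:, :2] / cell).astype(np.int64)
    origin = ij.min(axis=0)
    ij -= origin                                   # grid indices start at 0
    nx, ny = ij.max(axis=0) + 1

    z_sum = np.zeros((nx, ny))
    count = np.zeros((nx, ny), dtype=np.int64)
    np.add.at(z_sum, (ij[:, 0], ij[:, 1]), pts[:, 2])
    np.add.at(count, (ij[:, 0], ij[:, 1]), 1)

    occupied = count > 0
    index = np.full((nx, ny), -1, dtype=np.int64)  # vertex id per cell, -1 = empty
    index[occupied] = np.arange(occupied.sum())

    xs, ys = np.nonzero(occupied)                  # same row-major order as index
    vertices = np.column_stack([
        (xs + origin[0] + 0.5) * cell,
        (ys + origin[1] + 0.5) * cell,
        z_sum[occupied] / count[occupied],
    ])

    # Corners of each 2x2 block: a=(i,j), b=(i+1,j), c=(i,j+1), d=(i+1,j+1).
    a, b = index[:-1, :-1], index[1:, :-1]
    c, d = index[:-1, 1:], index[1:, 1:]
    full = (a >= 0) & (b >= 0) & (c >= 0) & (d >= 0)
    faces = np.concatenate([
        np.stack([a[full], b[full], d[full]], axis=1),  # counter-clockwise seen from +z
        np.stack([a[full], d[full], c[full]], axis=1),
    ])
    return vertices, faces
```

The limitations show up immediately: a single empty cell removes every triangle around it, and walls, overhangs, or a door handle cannot be represented because each (x, y) cell holds only one height.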

In the meantime, NeRF has a major advantage: its points are not just colored dots but small, semi-transparent cloudlets whose color changes with the angle from which they are seen. This lets them approximate reflections, refractions, and subtle texture details.
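
The color-changing cloudlets correspond to how NeRF renders a pixel: each sample along a camera ray has a density and a color that depends on the viewing direction, and the samples are alpha-composited front to back. The sketch below implements that compositing rule with NumPy. In a real NeRF, densities and colors come from a trained network; here they are hand-picked numbers, and `toy_view_color` is a hypothetical stand-in for the network's direction-dependent color output.

```python
import numpy as np


def render_ray(sigmas, colors, deltas):
    """Composite samples along one ray with the NeRF quadrature rule.

    sigmas: (N,) volume densities at the samples, ordered near to far.
    colors: (N, 3) RGB at the samples, already evaluated for this ray's
            viewing direction (this is where view dependence enters).
    deltas: (N,) distances between consecutive samples.
    Returns (rgb (3,), weights (N,)).
    """
    sigmas = np.asarray(sigmas, dtype=np.float64)
    deltas = np.asarray(deltas, dtype=np.float64)
    colors = np.asarray(colors, dtype=np.float64)
    alpha = 1.0 - np.exp(-sigmas * deltas)             # opacity of each sample
    # Transmittance T_i = prod_{j<i} (1 - alpha_j): light surviving to sample i.
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alpha)[:-1]])
    weights = trans * alpha
    return weights @ colors, weights


def toy_view_color(base_rgb, view_dir, highlight_dir, shininess=20.0):
    """Hypothetical stand-in for NeRF's direction-conditioned color output:
    the base color plus a highlight that appears only when the viewing
    direction lines up with `highlight_dir`."""
    d = np.asarray(view_dir, dtype=np.float64)
    h = np.asarray(highlight_dir, dtype=np.float64)
    cos = float(np.dot(d / np.linalg.norm(d), h / np.linalg.norm(h)))
    highlight = max(cos, 0.0) ** shininess
    return np.clip(np.asarray(base_rgb, dtype=np.float64) + highlight, 0.0, 1.0)


if __name__ == "__main__":
    sigmas = np.array([0.0, 0.5, 50.0])  # empty space, a thin cloudlet, a solid surface
    deltas = np.array([0.1, 0.1, 0.1])
    base = [[0.0, 0.0, 0.0], [0.2, 0.4, 0.8], [0.6, 0.6, 0.6]]
    for view in ([0.0, 0.0, 1.0], [1.0, 0.0, 0.0]):
        colors = [toy_view_color(b, view, [0.0, 0.0, 1.0]) for b in base]
        rgb, _ = render_ray(sigmas, colors, deltas)
        print(view, rgb.round(3))
```

Running it prints two different pixel colors for the same three samples, one per viewing direction, which is the effect that lets NeRF approximate reflections.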

Some of our experiments are shown below: